
Whether you’ve launched a redesign of your website or introduced a new feature in your app, this is usually the point at which people move on to the next project. But that’s a mistake.

Only when a website, app or feature goes live can we see actual users interacting with it in a completely natural way. Only then will we know whether it was successful or not.

Not that things are ever so black and white. Even if it seems successful, there is always room for improvement. This is especially true for conversion rate optimization. Even small optimizations can lead to significant increases in sales, leads, or other important metrics.

Would you like to find out more about testing and improving your website? Join Paul Boag at his upcoming live workshop, Fast and affordable user research and testing, starting July 11th.

Make time for post-launch iteration

The key is to plan ahead for post-launch optimization right from the start. When defining the timeline of your project or sprint, don’t equate the launch with the end. Instead, schedule the launch of the new website, app, or feature to be about two-thirds of the way through your timeline. This leaves time for monitoring and iteration after launch.

Better yet, divide your team’s time into two workflows. One would focus on “innovation”: introducing new features or content. The second would focus on “optimization”: improving what is already online.

In short: Do everything you can to allow at least some time after release to optimize the experience.

Once you’ve done this, you can begin to identify areas on your website or app that are underperforming and could be improved.

Identify problem points

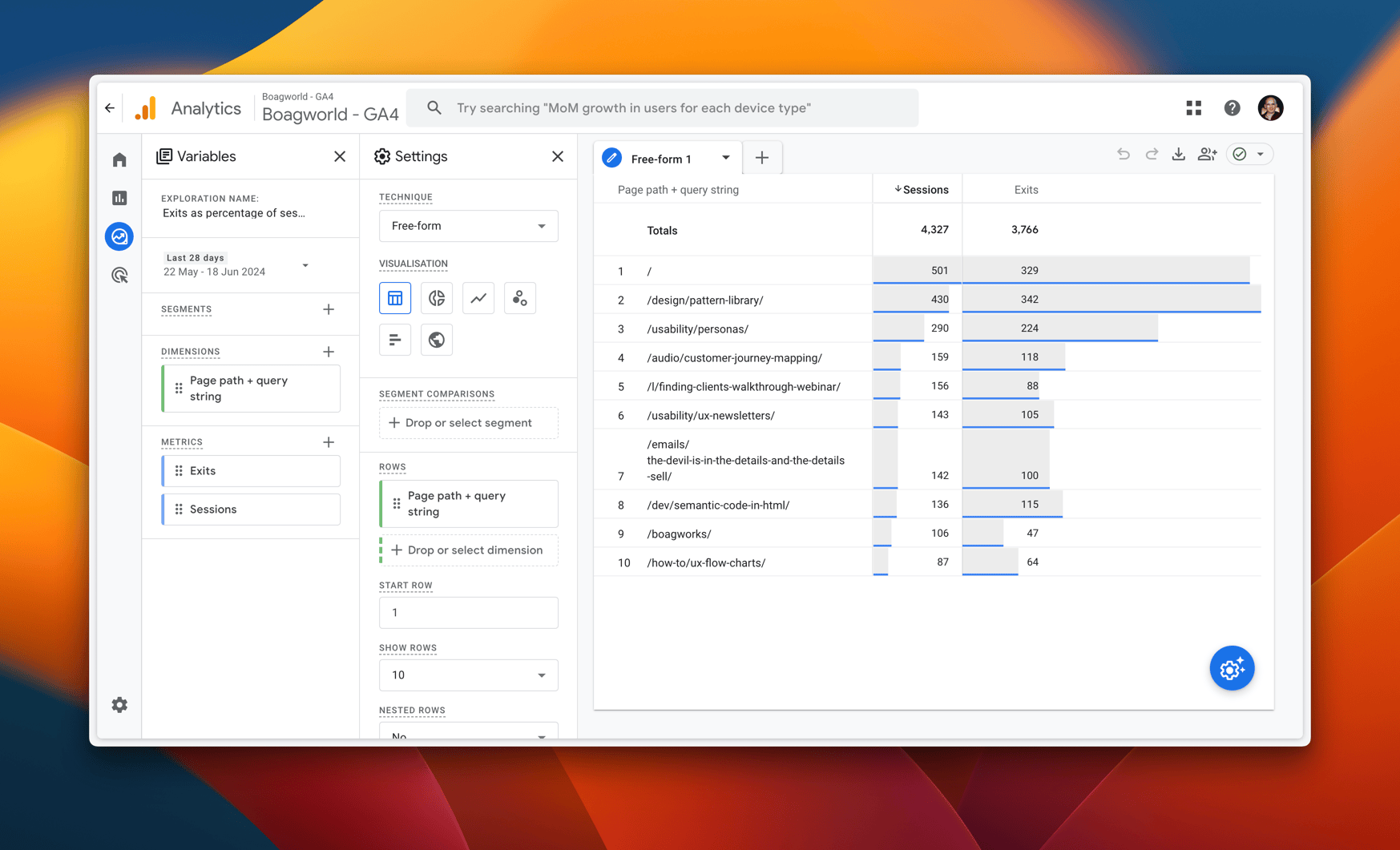

Analytics can help here. Look for areas with high bounce rates or exit points. These are the points where users drop out. Also look for low-performing conversion points. However, don’t forget to look at these figures as a percentage of the traffic the page or feature receives. Otherwise, your most popular pages will always seem to be the biggest problem.
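As a rough illustration of why percentages matter more than raw counts, here is a minimal Python sketch. The page paths, numbers, and the `min_views` cut-off are all made up for the example and are not taken from any analytics tool. It ranks pages by exit rate and ignores pages with too little traffic to judge.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    path: str
    views: int
    exits: int

    @property
    def exit_rate(self) -> float:
        # Exits as a share of the views this page received.
        return self.exits / self.views if self.views else 0.0


def rank_by_exit_rate(pages, min_views=100):
    """Sort pages by exit rate, skipping pages with too few views to judge."""
    eligible = [p for p in pages if p.views >= min_views]
    return sorted(eligible, key=lambda p: p.exit_rate, reverse=True)


# Hypothetical figures, as if exported from an analytics tool.
pages = [
    PageStats("/", views=50_000, exits=10_000),
    PageStats("/pricing", views=4_000, exits=2_400),
    PageStats("/checkout", views=1_200, exits=540),
    PageStats("/about", views=40, exits=30),
]

for page in rank_by_exit_rate(pages):
    print(f"{page.path:<10} {page.exit_rate:.0%} of {page.views} views")
```

Sorted by raw exits, the home page would look like the worst offender. Sorted by rate, the pricing page comes out on top, which is the more useful signal.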

To be honest, this is more cumbersome in Google Analytics 4 than it should be. So if you are not familiar with the platform, you may need help.

Not that Google Analytics is the only tool that can help; I can also highly recommend Microsoft Clarity. This free tool provides detailed user data, including session recordings and heatmaps. These will help you figure out where you can improve your website or app.

Pay particular attention to “insights” that show you metrics including:

- Rage clicks: where people keep clicking on something out of frustration.
- Dead clicks: where people click on something that isn’t clickable.
- Excessive scrolling: where people scroll up and down looking for something.
- Quick backs: when people accidentally visit a page and quickly return to the previous page.

Along with exits and bounces, these metrics indicate that something is wrong and should be investigated further.
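To make the first of these metrics more concrete, the sketch below flags a burst of repeated clicks in roughly the same spot within a short time window. This is only one possible heuristic. The `Click` type and the thresholds (three clicks, one second, 30 pixels) are assumptions made for illustration, not the definition any particular tool uses.

```python
from dataclasses import dataclass


@dataclass
class Click:
    t: float  # seconds since the session started
    x: int    # horizontal position in pixels
    y: int    # vertical position in pixels


def has_click_burst(clicks, min_clicks=3, window=1.0, radius=30):
    """Return True if min_clicks land near each other within `window` seconds."""
    ordered = sorted(clicks, key=lambda c: c.t)
    for i, first in enumerate(ordered):
        # Count clicks that follow `first` closely in both time and position.
        burst = [
            c for c in ordered[i:]
            if c.t - first.t <= window
            and abs(c.x - first.x) <= radius
            and abs(c.y - first.y) <= radius
        ]
        if len(burst) >= min_clicks:
            return True
    return False


frustrated = [Click(10.0, 200, 300), Click(10.3, 204, 298), Click(10.6, 199, 303)]
relaxed = [Click(10.0, 200, 300), Click(14.0, 204, 298), Click(19.0, 640, 120)]
print(has_click_burst(frustrated), has_click_burst(relaxed))
```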

Diagnosing the specific problems

Once you’ve found a problem page, the next challenge is to diagnose exactly what’s going wrong.

I usually start by looking at the page heatmaps, which you can find in Clarity or similar tools. These heatmaps show you where people are engaging on the site and potentially flag issues.

If that doesn’t help, I watch recordings of people exhibiting the problematic behavior. Watching these session recordings can provide invaluable insights. They highlight the specific pain points that users face and can guide you to possible solutions.

If the problem is still unclear, I may conduct a survey. I will ask users about their experiences. Or I recruit some people and do usability testing on the site.

Surveys are easier to conduct, but they can be a bit disruptive and don’t always provide the insights you want. When I use a survey, I usually only show it at exit intent to minimize disruption to the user experience.

When I conduct usability testing, I prefer facilitated testing in this scenario. Although more time consuming to run, it allows me to ask questions that almost always reveal the problem on the page. Normally, you only need to test with 3 to 6 people.

Once you have identified the specific problem, you can start experimenting with solutions.

Test possible solutions

There are almost always multiple ways to approach a given problem. Therefore, it is important to test different approaches to find the best one. How you approach these tests depends on the complexity of your solution.

Sometimes an issue can be resolved with a simple fix that requires some UI tweaks or content changes. In this case, you can simply A/B test the variants to find out which ones perform better.

A/B test minor changes

If you’ve never done A/B testing before, it’s really not that complicated. The only downside is that, in my opinion, A/B testing tools are massively overpriced. That said, Crazy Egg is cheaper (although not as powerful), and VWO comes with a free tier.

Using an A/B testing tool starts with setting a goal, such as adding an item to the shopping cart. You then create versions of the page with your proposed improvement. These are shown to a certain percentage of visitors.
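Testing tools handle the split for you, but the idea behind “a certain percentage of visitors” can be sketched in a few lines. The example below assumes you have some stable visitor ID (from a cookie, for instance) and is not how any specific product works. The function name, experiment name, and `exposure` parameter are all hypothetical. Hashing the ID means the same visitor always sees the same version.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, exposure: float = 0.5) -> str:
    """Return 'variant' for roughly `exposure` of visitors, otherwise 'control'."""
    # Hash the experiment name with the visitor ID so that each experiment
    # splits visitors independently of the others.
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # a number from 0 to 1
    return "variant" if bucket < exposure else "control"


# Hypothetical experiment shown to about 20% of visitors.
visitors = [f"visitor-{n}" for n in range(10_000)]
shown = sum(assign_variant(v, "cart-button-copy", exposure=0.2) == "variant" for v in visitors)
print(f"{shown / len(visitors):.1%} of visitors saw the variant")
```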

Making the changes is usually done through a simple WYSIWYG interface and only takes a few minutes.

If your website has a lot of traffic, I recommend you explore as many solutions as you can. If you have a smaller website, focus on testing just a few ideas. Otherwise, it will take forever to see results.

Additionally, for sites with lower traffic, keep the goal as close to the experiment as possible to maximize the amount of traffic. If there is a large gap between the goal and the experiment, many people will drop out along the way and you will have to wait longer for results.

Not that A/B testing is always the right way to test ideas. If your solution is more complex, involving new features or multiple screens, A/B testing doesn’t work well. That is because A/B testing at this level of change effectively requires you to build the solution, which negates most of the benefits that A/B testing offers.
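To get a feel for how traffic, the number of versions, and the distance to the goal affect waiting time, here is a back-of-the-envelope estimate using the standard sample size approximation for comparing two conversion rates. The baseline rates, the 20% relative lift, and the daily traffic figure are invented for illustration. Treat the output as a rough guide rather than a substitute for your testing tool’s own calculator.

```python
import math
from statistics import NormalDist


def visitors_per_version(baseline, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed per version to detect the given lift."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_to_result(daily_visitors, versions, baseline, relative_lift):
    """Days needed if traffic is split evenly across all versions."""
    needed = visitors_per_version(baseline, relative_lift) * versions
    return math.ceil(needed / daily_visitors)


# Hypothetical site with 500 visitors a day reaching the tested page.
near_goal = days_to_result(500, versions=2, baseline=0.10, relative_lift=0.2)
far_goal = days_to_result(500, versions=2, baseline=0.02, relative_lift=0.2)
many_ideas = days_to_result(500, versions=5, baseline=0.10, relative_lift=0.2)
print(near_goal, far_goal, many_ideas)
```

A goal close to the experiment (such as adding to the cart) has a higher baseline rate than a distant one (such as completing a purchase), so it needs far fewer visitors. Each extra version you test adds to the total traffic required.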

Create prototypes and test major changes

Instead, the best option in such circumstances is to create a prototype that you can test remotely.

In the first instance, I tend to do unfacilitated testing using a tool like Maze. Unfacilitated tests are quick to set up, take little time, and Maze even provides you with success rate analytics.

However, if unfacilitated testing finds problems and you have doubts about how to resolve them, then consider facilitated testing. This is because facilitated testing allows you to ask questions and get to the heart of any problems that may arise.

The only disadvantage of usability testing compared with A/B testing is recruitment. Finding the right participants can be difficult. If this is the case, you should consider using a service like Askable, who will carry out the recruitment for you for a small fee.

Failing that, don’t be afraid to reach out to friends and family, as in most cases getting the exact demographic is less important than you might think. As long as people have comparable physical and cognitive abilities, you shouldn’t have a problem. The only exception is if the content on your website or app is highly specialized.

However, I would avoid recruiting anyone who works for the organization. They will inevitably be institutionalized and unable to provide unbiased feedback.

No matter what approach you use to test your solution, once you’re happy, you can push the change live to all users. But your work is not done yet.

Rinse and repeat

Once you’ve solved a problem, return to your analytics. Find the next biggest problem. Repeat the entire process. As you fix some problems, more will become apparent and you will quickly find that you have an ongoing program of improvements that can be made.

The more you do this type of work, the more obvious the benefits become. You will gradually see improvements in metrics like engagement, conversion, and user satisfaction. You can use these metrics to present management with arguments for continuous optimization. This is better than the trap of releasing one feature after another without regard to their performance.

Learn about fast and affordable user research and testing

If you are interested in user research and testing, check out Paul’s workshop, Fast and affordable user research and testing, starting July 11th.

Live workshop with practical examples.

5-hour live workshop + friendly questions and answers.

(cr, cm, il)
